
Welcome to the Community Forums at HiveWire 3D! Please note that the user name you choose for our forum will be displayed to the public. Our store closed as of January 4, 2021. You can find HiveWire 3D and Lisa's Botanicals products, as well as many of our Contributing Artists, at Renderosity. This thread lists where many are now selling their products. Renderosity is generously gifting products that were purchased at HiveWire 3D and are now sold at their store into customer accounts. This is not an overnight process, so please be patient if you have already emailed them about this. If you have NOT emailed them, please see the 2nd post in this thread for instructions on what you need to do.


I Just Wanted to Post an Image Thread

- Thread starter Stezza


Yes, I remember you mentioning that about PSP. Unfortunately my copy is very old, as I quit updating it around version 9, and only have version 7 installed, which I use for quick screenshots and such.

This is rather new even in PaintShop. They first introduced the new AI tools in the 2022 version, and then improved and expanded them a bit in the 2023 version.

mmm... I got this lol

Yes, three of the main issues with generative AI are:

- Image coherence: For example, it doesn't know trees only grow on the ground, so we have to tell it explicitly. It knows hands have fingers, but doesn't know how many. It knows humanoid robots have arms and legs, but doesn't know how many, and that they need to be attached to the torso. All those things can be fixed over time with extra model training.

- Natural language interpretation: When reading text prompts, it sometimes doesn't understand the order of things (like in your image), or notions of placement, like "on top of", or "on the right side of". It usually doesn't get those things right, but it will be fixed over time. I believe Dall-E 2 has already fixed it. The model just needs more training, and this takes time.

- Illiteracy: Most generative AIs need to be taught how to write. This has been fixed in Imagen, but last I saw, Dall-E 2 still turns text into gibberish scribbles. Same with Stable Diffusion and many others.

Saphirewild

Brilliant

Made this fun render with mix and match clothes from GF2!

Love the render!

Are you using DS? Hopefully you don't mind if I make a suggestion?

On the top in the middle where it looks a bit jagged, this can be fixed by playing with the smooth settings if you have a smooth modifier applied.

Also, if you add a dForce modifier to it and simulate, the shoulder straps will then sit on the shoulders. Hope you don't mind the suggestions. Great render!


There's an article today in several newspapers about someone who won 1st place in an art contest at a state fair in Colorado using Midjourney. Seems quite a few folks are upset by it.

He used AI to win a fine-arts competition. Was it cheating?


And they only found out because the guy admitted to using AI-generated images. He could have gotten away with it, but wanted to make a point.

parkdalegardener

Adventurous

For an open-source, standalone AI upscaler, try Video2X. It will batch process, but it will also clean out whatever temp location you use when it's done, so be careful if you use the same cache for everything.

parkdalegardener

Adventurous

So, I downloaded Stable Diffusion and installed it locally. I agreed I would not use it to make questionable content and it agreed not to render usable language to screen. Fair deal. I ran a test sample prompt in Stable Diffusion with a little confusion in the wording, trying to be a smart a$$ and see what comes up. I asked it for 5 images and it gave me 5 images. Well, that's to be expected. Got what I asked for. The interesting part is that the "AI" seems to want them for itself. No debating copyright; 4 of the images came with Stable Diffusion's AI claiming them as its own. After all, it signed them in the lower left. OK, so there is some tongue-in-cheek humour there, but I did notice something in 4 of these 5 images.

I just thought it interesting in that the discussion of intellectual property needs to expand. Is the AI sampling styles and determining that some form of signature is part of artistic expression and as such needs to be part of an output image, even if it is in unrecognizable script; or is the AI deciding that it is responsible for the work so it gets a sig of its own?

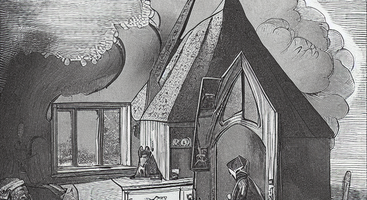

The text that got this result? "the small english cottage had a chimney that belched out coloured smoke, which failed to hide the witch inside; peering outward through the oval framed window"


By default, Stable Diffusion adds an invisible watermark to the generated images, which is actually metadata tags, nothing on the image itself. It also has a self-imposed censorship of R-rated images. However, since this is open source, all of that can be disabled if you know your way around Python. In my local version, I have disabled all of it.

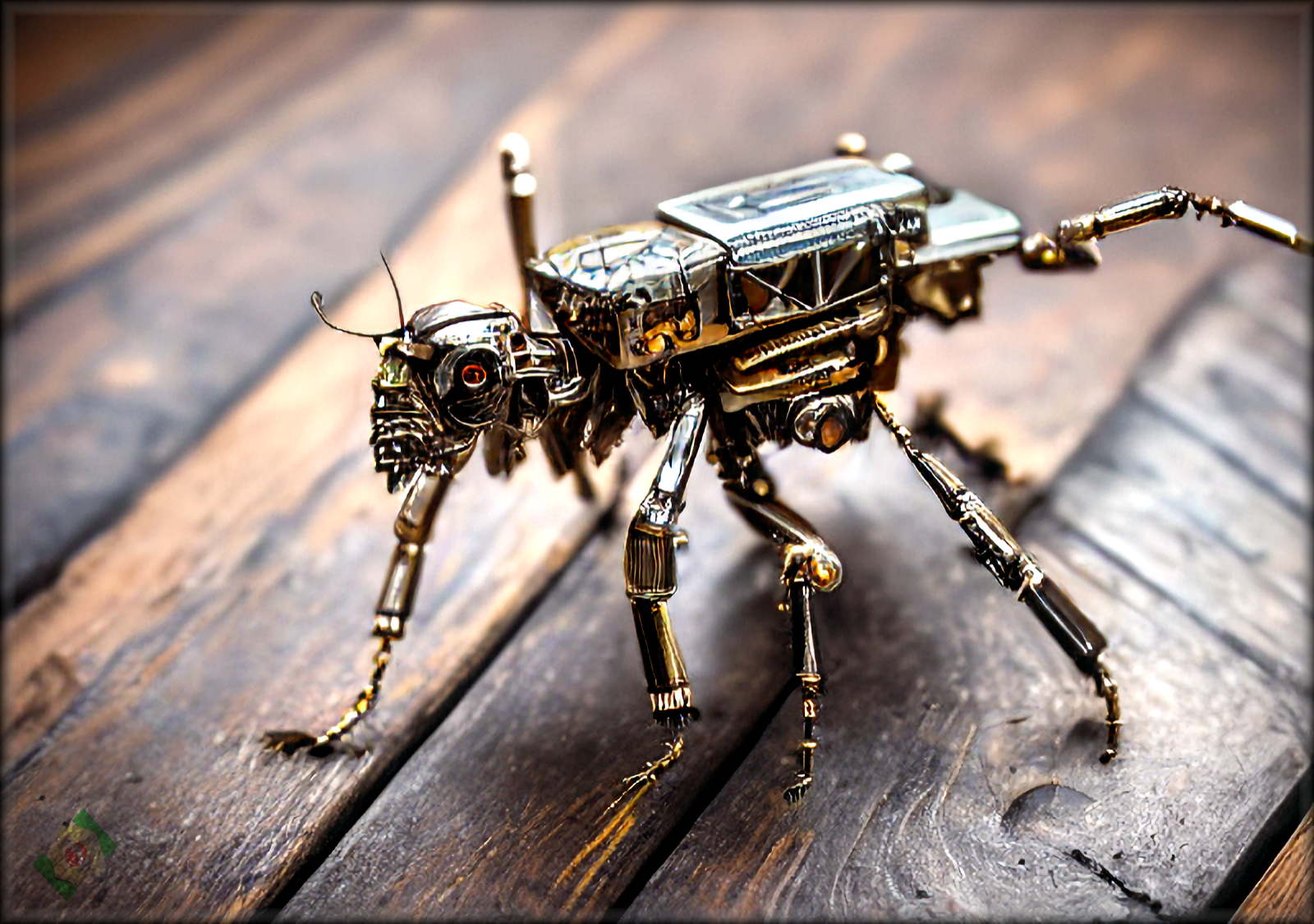

Here's a macro photograph done with Stable Diffusion of a very small robot insect.