That looks Powerful.

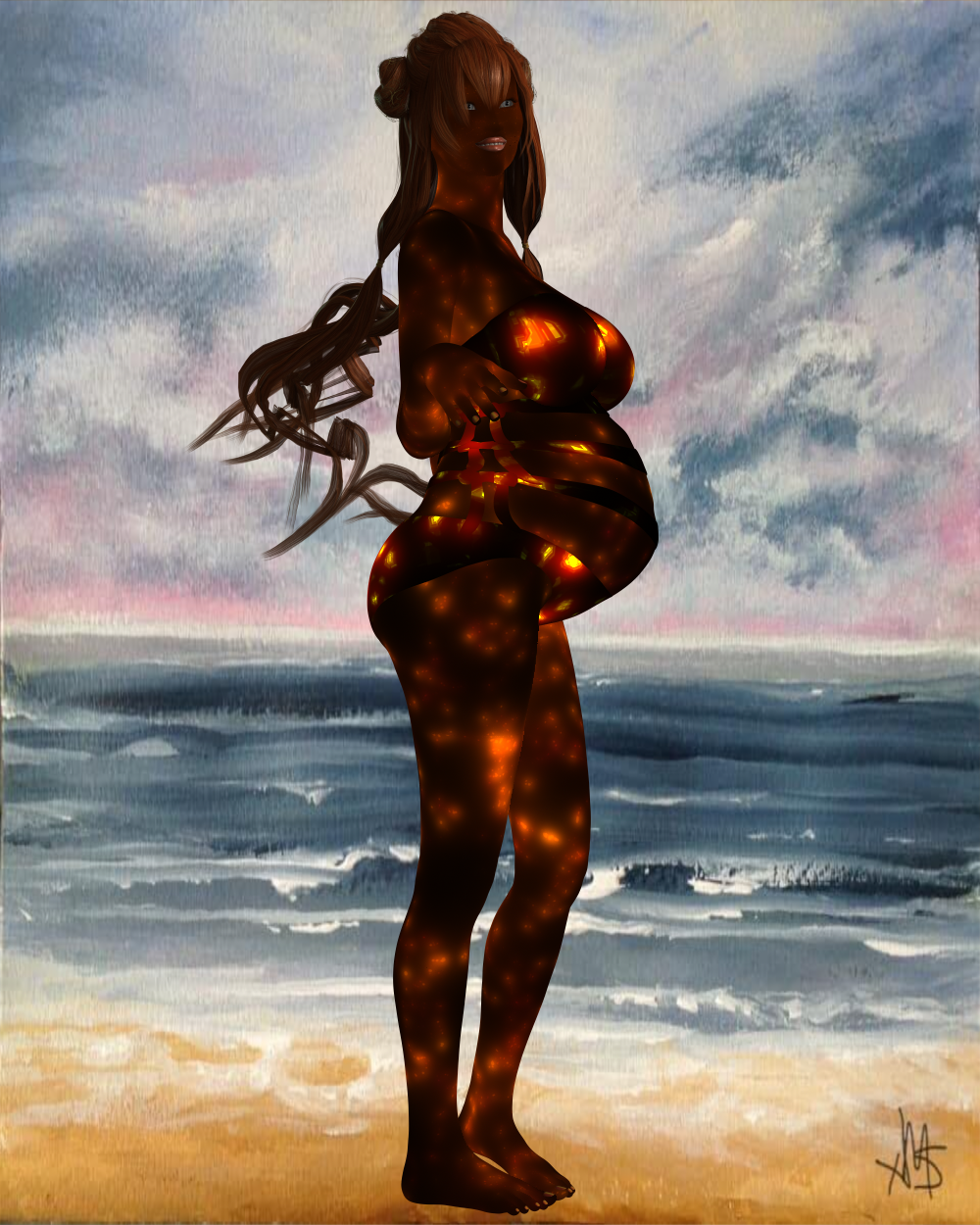

It is indeed, but I still have to get the hang of it. I had to redo the hands multiple times (an SD thing), and it confuses the tail with all sorts of things. I hope to figure these things out with more practice. ^^

That looks Powerful.

ControlNet. Very powerful indeed and very easy to train. It makes my old 3D-program-to-Stable-Diffusion render pipeline a little easier, as it does a better job of depth maps. Canny edge detection along with the depth mapping is very powerful indeed. Throw in openpose and openpose_hand (which still isn't really great) and there is really no more rolling of the dice. A person should be quite able to control the output. I've posted a workflow for these tools but am loath to give a URL, as it is on a site that competes with Hivewire and I don't want to fend off the rabid mob of anti-AI people that cruise Rendo. It doesn't have to be Poser, Blender, DS, Makehuman, or anything else specific to start with, so each of the fanboys/gurlz for any given program can take a whack at the AI user with great joy. Ah, the glory of social media and the ability to be a troll without hiding under a bridge.

Get a handle on ControlNet. Pose control now includes hands. Perfect? No. Damn good? You bet. The ability to use these networks on any 1.4 or 1.5 model makes the ControlNet framework very powerful. Multiple figure interaction is possible using manikins from your 3D modeling software of choice for the pose control. No model merging necessary. It does it on the fly. Non-destructive. SD 2.x support is coming shortly. Tencent released their version of the ControlNet training just yesterday. It seems to use similar methods, though they also say it should recognize animal poses. I haven't had a chance to run it yet. I suspect that Rigify in Blender or skeleton figures in Poser/DS would actually work well with this.

Someone suggested ControlNet on Facebook last night, AFTER I posted this image. I have played with it for a few hours, but it couldn't figure out what to do with tails. It confuses them with extra arms, legs, or even furniture framing. It couldn't work out the pose because the tail was confusing it. I need to practice more to see how to handle the different models and parameters.

Yeah, I've had my share of trolling from anti-AI advocates at both Rendo and FB. They come in lynch mobs, attacking artists in large groups with all sorts of personal attacks, and sometimes claiming absurd things about AI, showing they don't understand what they are attacking. It's OK to oppose something, but they have to have a valid argument. Most of what they claim is simply not true, but have you tried having a rational conversation with a lynch mob?

Get a handle on ControlNet.

With the addition of tools coming at the rate they are, none of the bogus reasoning and pseudoscience will really have a leg to stand upon in the near future.

Well, Plask.ai has a free tier, and I have not found it bad for BVH with legacy figures in DAZ Studio. Genesis and later figures just get worse with foot sliding each generation, whatever the BVH format or conversion method, in DAZ Studio at least. They work OK in iClone, Unreal, etc.

Aww, now I had to look for the Make Art button. It's gone!! I hadn't thought about it in so long I forgot that joke was in Poser.

As for animation, I suspect that with a little work BVH can transfer to Pose Control. When I first started working with OpenCV a couple of years ago, I was working with pose estimation and joining that with Kinect camera skeletons and depth mapping. The tools are now more advanced, and opencv-python has advanced quite a bit as well.

Three of Tencent's nets are now functioning with ControlNet yamls and a Gradio interface. It's really hard to keep up with it all. No sooner do I finish reading a white paper than someone has already jumped on it.

You mean like ControlNet OpenPose?

You misunderstand what I am saying about BVH files. I am not talking about using them to manipulate 3D figures. I am talking about using BVH directly in generative AI. The pose estimation in computer vision applications outputs what is essentially a BVH skeleton. When you do animation using i2i, consistency only exists at very low denoising levels, which makes for very little change from the input video. Over the weekend (I have to go out shortly, so I haven't started yet) I want to see if I can use BVH files directly, or with a little re-ordering, as the input to guide the diffusion.
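To make the BVH idea a bit more concrete, here is a minimal sketch of what the very first step might look like: reading the HIERARCHY block of a BVH file and turning it into rest-pose joint positions plus a bone list, which is roughly the kind of data a stick-figure pose image is drawn from. This is only an illustration under assumptions, not a working ControlNet input. The names `Joint`, `parse_bvh_hierarchy`, `rest_positions`, and `SAMPLE_BVH` are made up for this example, the parser assumes each brace sits on its own line, and it ignores the MOTION frames, the 2D projection, and any re-ordering of joints to match a particular pose network's keypoint layout.

```python
from dataclasses import dataclass

# Tiny hand-written BVH file used only for illustration (hypothetical data).
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
    OFFSET 0.0 90.0 0.0
    CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
    JOINT Spine
    {
        OFFSET 0.0 10.0 0.0
        CHANNELS 3 Zrotation Xrotation Yrotation
        JOINT Head
        {
            OFFSET 0.0 20.0 0.0
            CHANNELS 3 Zrotation Xrotation Yrotation
            End Site
            {
                OFFSET 0.0 5.0 0.0
            }
        }
    }
    JOINT LeftUpLeg
    {
        OFFSET 8.0 -5.0 0.0
        CHANNELS 3 Zrotation Xrotation Yrotation
        End Site
        {
            OFFSET 0.0 -40.0 0.0
        }
    }
}
MOTION
Frames: 1
Frame Time: 0.033333
0 90 0 0 0 0 0 0 0 0 0 0 0 0 0
"""


@dataclass
class Joint:
    name: str
    parent: int      # index of the parent joint, -1 for the root
    offset: tuple    # (x, y, z) offset from the parent in the rest pose


def parse_bvh_hierarchy(text):
    """Parse only the HIERARCHY section into a flat, parent-first joint list."""
    joints = []
    stack = []       # open blocks: a joint index, or None for an End Site
    current = None   # what the next "{" will open
    for raw in text.splitlines():
        tokens = raw.split()
        if not tokens:
            continue
        key = tokens[0]
        if key == "MOTION":
            break    # animation frames are not handled in this sketch
        if key in ("ROOT", "JOINT"):
            parent = stack[-1] if stack else -1
            joints.append(Joint(tokens[1], parent, (0.0, 0.0, 0.0)))
            current = len(joints) - 1
        elif key == "End":
            current = None   # End Site: tracked for brace matching only
        elif key == "{":
            stack.append(current)
        elif key == "}":
            stack.pop()
        elif key == "OFFSET" and stack and stack[-1] is not None:
            joints[stack[-1]].offset = tuple(float(v) for v in tokens[1:4])
    return joints


def rest_positions(joints):
    """Accumulate offsets down the tree to get rest-pose world positions."""
    positions = []
    for joint in joints:
        base = positions[joint.parent] if joint.parent >= 0 else (0.0, 0.0, 0.0)
        positions.append(tuple(b + o for b, o in zip(base, joint.offset)))
    return positions


joints = parse_bvh_hierarchy(SAMPLE_BVH)
positions = rest_positions(joints)
# Bones as (parent_index, child_index) pairs, ready to be drawn as lines.
bones = [(j.parent, i) for i, j in enumerate(joints) if j.parent >= 0]
for joint, pos in zip(joints, positions):
    print(joint.name, pos)
```

Getting from here to something a pose network would accept would still mean applying the per-frame rotations, projecting to 2D through a camera, and mapping the BVH joint names onto whatever keypoint order the network expects, which is presumably the "little re-ordering" part.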

The batch processing limitation is not an issue if you run locally. I have been running batches since I installed the Nets. I haven't had the time to batch the Tencent implementations for sketch input yet but I have no problem running their Nets on my machines.

I know both the video presenters you mention, but by the time they present something I've already read the white paper and am working with it locally. After all, both of them predicted the death of AI with the lawsuits and again announced its demise with the release of 2 and 2.1. I haven't looked at their channels in a couple of weeks, though, so I don't know how they are doing right now. Both have good info.